Lucene (20): A Comprehensive Lucene Application Example

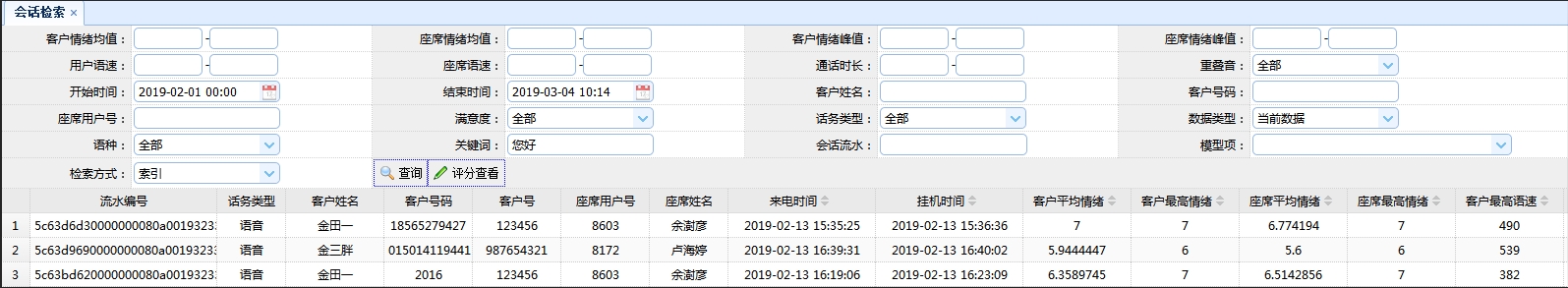

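A recent project required me to build a "conversation search" feature: audio recordings are transcribed to text, and the transcripts are then searched by keyword. The feature exercises a fairly complete range of search condition types (for example time range, keyword, and speech rate) and has quite a few pitfalls, so I am sharing it here. I hope it helps.

Feature description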

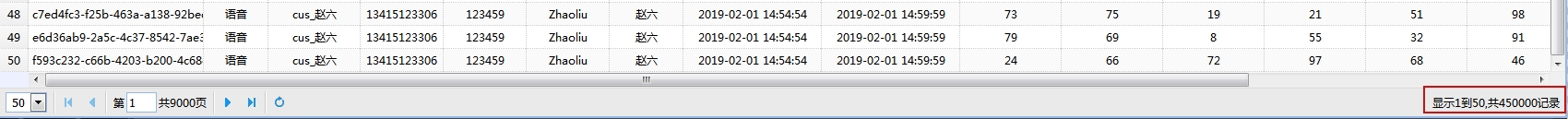

Conversation search can search multiple folders at once, handles an index of roughly 1300 MB (about 450,000 records), and combines multiple conditions with AND.
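The AND semantics can be illustrated with plain Java predicates. This is only a minimal sketch under stated assumptions: the `Conversation` class, its fields (`startTime`, `text`, `speechRate`), and the helper names are hypothetical, and the filtering happens in memory. In Lucene itself, each condition would normally become a required clause of a combined query instead.

```java
import java.time.LocalDateTime;
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical stand-in for one transcribed conversation record.
final class Conversation {
    final LocalDateTime startTime;
    final String text;
    final double speechRate; // assumed unit: characters per second

    Conversation(LocalDateTime startTime, String text, double speechRate) {
        this.startTime = startTime;
        this.text = text;
        this.speechRate = speechRate;
    }
}

final class AndSearchSketch {
    // A record is a hit only if every condition holds (AND semantics).
    static List<Conversation> search(List<Conversation> records,
                                     List<Predicate<Conversation>> conditions) {
        Predicate<Conversation> all = c -> true;
        for (Predicate<Conversation> condition : conditions) {
            all = all.and(condition);
        }
        List<Conversation> hits = new ArrayList<>();
        for (Conversation record : records) {
            if (all.test(record)) {
                hits.add(record);
            }
        }
        return hits;
    }

    // Time range condition, inclusive on both ends.
    static Predicate<Conversation> timeBetween(LocalDateTime from, LocalDateTime to) {
        return c -> !c.startTime.isBefore(from) && !c.startTime.isAfter(to);
    }

    // Keyword condition: plain substring match on the transcript.
    static Predicate<Conversation> containsKeyword(String keyword) {
        return c -> c.text.contains(keyword);
    }

    // Speech rate condition: at least the given rate.
    static Predicate<Conversation> speechRateAtLeast(double min) {
        return c -> c.speechRate >= min;
    }
}
```

Adding another condition type only means adding another predicate to the list; a record that fails any single condition is dropped.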

Dependencies

The Lucene version used is 5.5.3, which is fairly old; the latest release at the time of writing is 7.7.0.

Implementation

Coding

Pagination class Page
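The original `Page` listing is not reproduced here. As a rough idea of what such a class usually holds, here is a minimal sketch; every field and method name in it is an assumption, not the author's code.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical pagination holder; names and layout are assumptions.
final class Page<T> {
    private final int currentPage; // 1-based page number
    private final int pageSize;    // number of records per page
    private long totalRecords;     // total number of hits for the query
    private List<T> items = new ArrayList<>();

    Page(int currentPage, int pageSize) {
        if (currentPage < 1 || pageSize < 1) {
            throw new IllegalArgumentException("currentPage and pageSize must be >= 1");
        }
        this.currentPage = currentPage;
        this.pageSize = pageSize;
    }

    // 0-based index of the first hit on this page (inclusive).
    long getStartIndex() {
        return (long) (currentPage - 1) * pageSize;
    }

    // Index just past the last hit on this page (exclusive), capped at the total.
    long getEndIndex() {
        return Math.min((long) currentPage * pageSize, totalRecords);
    }

    // Number of pages needed for all hits, rounding up.
    long getTotalPages() {
        return (totalRecords + pageSize - 1) / pageSize;
    }

    void setTotalRecords(long totalRecords) { this.totalRecords = totalRecords; }
    long getTotalRecords() { return totalRecords; }
    void setItems(List<T> items) { this.items = items; }
    List<T> getItems() { return items; }
}
```

A caller would typically set the total hit count first and then copy the hits in the range from `getStartIndex()` up to `getEndIndex()` into `items`; when the requested page lies beyond the last hit, that range is empty.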

Conversation record entity class IQCConversationInfoBean

Lucene utility class LuceneUtils

Service

Search flow

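The keyword is parsed (by the QueryParser) into a query condition, a Query such as a TermQuery. The search tool (IndexSearcher) then uses that query to fetch the IDs of matching documents from the index, and the document contents are retrieved from the stored documents by those IDs.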
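The three steps of this flow (keyword to term, term to document IDs, document IDs to stored documents) can be modelled with a toy in-memory index. This sketch deliberately does not use Lucene's API; `SearchFlowSketch` and its methods are hypothetical names, and the whitespace tokenization is a simplification.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

final class SearchFlowSketch {
    // term -> IDs of the documents containing it (a toy inverted index)
    private final Map<String, List<Integer>> invertedIndex = new HashMap<>();
    // document ID -> stored document content
    private final List<String> storedDocs = new ArrayList<>();

    // Indexing: store the text and register its ID under each lower-cased term.
    int addDocument(String text) {
        int docId = storedDocs.size();
        storedDocs.add(text);
        for (String term : text.toLowerCase(Locale.ROOT).split("\\s+")) {
            if (term.isEmpty()) {
                continue;
            }
            List<Integer> postings = invertedIndex.computeIfAbsent(term, k -> new ArrayList<>());
            // Avoid listing the same document twice for a repeated term.
            if (postings.isEmpty() || postings.get(postings.size() - 1) != docId) {
                postings.add(docId);
            }
        }
        return docId;
    }

    // Steps 1 and 2: turn the keyword into a term and look up matching document IDs.
    List<Integer> findDocIds(String keyword) {
        String term = keyword.trim().toLowerCase(Locale.ROOT);
        return invertedIndex.getOrDefault(term, Collections.emptyList());
    }

    // Step 3: fetch the stored documents for those IDs.
    List<String> search(String keyword) {
        List<String> results = new ArrayList<>();
        for (int docId : findDocIds(keyword)) {
            results.add(storedDocs.get(docId));
        }
        return results;
    }
}
```

In Lucene the same roles are played by the query parser, the `IndexSearcher` search call that returns document IDs, and the lookup of a stored document by ID.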

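Different analyzers produce different index data, so the analyzer used at query time generally needs to match the one used at index time. The code here uses IKAnalyzer, a widely used Chinese analyzer.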
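Why the analyzer matters can be shown with two toy tokenizers. In this sketch each letter of `"ABCD"` stands in for one Chinese character; `unigrams` and `bigrams` are hypothetical simplifications and are not how IKAnalyzer actually segments text.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.function.Function;

final class AnalyzerMismatchSketch {
    // Toy analyzer 1: every character becomes its own token.
    static List<String> unigrams(String text) {
        List<String> tokens = new ArrayList<>();
        for (int i = 0; i < text.length(); i++) {
            tokens.add(text.substring(i, i + 1));
        }
        return tokens;
    }

    // Toy analyzer 2: every pair of adjacent characters becomes a token.
    static List<String> bigrams(String text) {
        List<String> tokens = new ArrayList<>();
        if (text.length() == 1) {
            tokens.add(text);
        }
        for (int i = 0; i + 2 <= text.length(); i++) {
            tokens.add(text.substring(i, i + 2));
        }
        return tokens;
    }

    // The set of terms that would be written to the index for one document.
    static Set<String> indexTerms(String document, Function<String, List<String>> analyzer) {
        return new HashSet<>(analyzer.apply(document));
    }

    // A query matches only if all of its terms exist in the index.
    static boolean matches(Set<String> indexTerms, String query,
                           Function<String, List<String>> analyzer) {
        List<String> queryTerms = analyzer.apply(query);
        return !queryTerms.isEmpty() && indexTerms.containsAll(queryTerms);
    }
}
```

Indexing `"ABCD"` with `bigrams` stores the terms `AB`, `BC`, and `CD`. Querying `"CD"` with the same tokenizer finds the term, while querying with `unigrams` looks for `C` and `D`, which were never indexed, so the document is missed.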

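Results

Notes

Use multiple threads. To search with multiple threads, you only need to create a thread pool and hand it to the IndexSearcher. Internally, Lucene checks whether the IndexSearcher has an executor; if it does, each task searches one part of the index, and the per-part results are gathered through futures and merged into the final result set. Processing the parts concurrently in this way improves search speed. For details, see the source of the search method in IndexSearcher.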

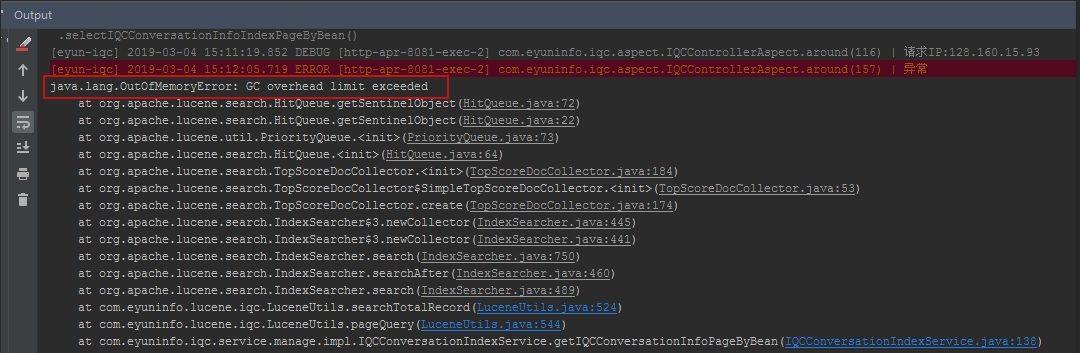

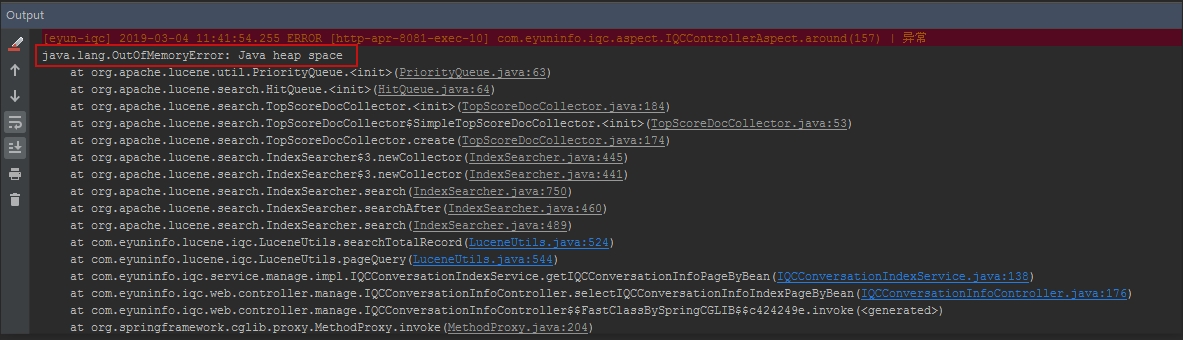
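The executor-and-futures pattern described in this note can be sketched with the standard library alone. This is a simplified model, not Lucene's code: each inner list stands for the hit scores found in one part of the index, and `ParallelSearchSketch` and `topN` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;

final class ParallelSearchSketch {
    // Searches every slice on the executor, then merges the per-slice results.
    static List<Integer> topN(List<List<Integer>> slices, int n, ExecutorService executor) {
        List<Future<List<Integer>>> futures = new ArrayList<>();
        for (List<Integer> slice : slices) {
            // One task per slice: compute that slice's local top n.
            Callable<List<Integer>> task = () -> localTopN(slice, n);
            futures.add(executor.submit(task));
        }
        List<Integer> merged = new ArrayList<>();
        for (Future<List<Integer>> future : futures) {
            try {
                merged.addAll(future.get()); // blocks until this slice is done
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while searching", e);
            } catch (ExecutionException e) {
                throw new IllegalStateException("slice search failed", e.getCause());
            }
        }
        // The global top n is contained in the union of the local top n lists.
        return localTopN(merged, n);
    }

    // Highest n scores of one list, in descending order.
    private static List<Integer> localTopN(List<Integer> scores, int n) {
        List<Integer> sorted = new ArrayList<>(scores);
        sorted.sort(Comparator.reverseOrder());
        return new ArrayList<>(sorted.subList(0, Math.min(n, sorted.size())));
    }
}
```

With Lucene, the thread pool is passed to the `IndexSearcher(IndexReader, ExecutorService)` constructor and the splitting and merging happen inside the searcher. The pool should be shut down by the code that created it.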

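Limit the number of folders searched at once. Searching too many folders at the same time increases the GC load.

Give the JVM as much memory as you can afford, to avoid out-of-memory errors.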

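Summary

This article records how to do multi-condition search with Lucene: searching multiple directories with multiple threads, paginating results, querying with several types of conditions, and the points to watch when the data volume is large. To make better use of Lucene, a later article will summarize how to improve its search performance.

For full-text search, Lucene is far better than a database in match quality, speed, and efficiency.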